Transform matrices are confusing! There, I said it. If you’ve never taken the time to play around with transform matrices in MRI, I completely understand. That said, it’s not a bad idea to get some exposure to the world of warping and the matrices that it often depends on. First of all, there are different types of transforms. We tend to describe them in terms of how many parameters are allowed to vary (called degrees of freedom).

Linear Transforms

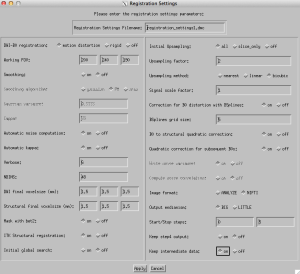

In AFNI, one of the most common transforms is a linear affine transform, which has 12 degrees of freedom. There are a few AFNI programs for performing affine transforms, depending on what transform you are interested in computing. If you wish to align the EPI (functional) runs to the anatomical dataset, you can use 3dAllineate or align_epi_anat.py (which calls 3dAllineate after some processing steps). I recommend align_epi_anat.py since it gives me better registrations with default options than just calling 3dAllineate.

align_epi_anat.py \
    -anat anat+orig. \
    -epi epi+orig. \
    -epi_base 0 \
    -epi2anat \
    -giant_move

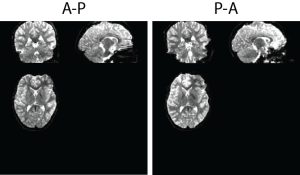
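To make the "12 degrees of freedom" a bit more concrete: an affine transform can be written as a 3x4 matrix, with a 3x3 block (rotation, scaling, shearing) plus a translation column, which gives 12 numbers in total. Below is a minimal Python sketch of applying such a matrix to a single coordinate. The matrix values and the helper name `apply_affine` are made up for illustration; they are not output from any AFNI program.

```python
# A 3x4 affine matrix: 9 numbers for the linear part (rotation, scale,
# shear) plus 3 for the translation = 12 free parameters.
# These values are illustrative only, not output from an AFNI program.
affine = [
    [1.25, 0.0, 0.0,   2.0],   # scale x by 1.25, shift x by 2 mm
    [0.0,  1.0, 0.0,  -3.0],   # leave y unscaled, shift y by -3 mm
    [0.0,  0.0, 0.75,  0.0],   # scale z by 0.75, no shift
]

def apply_affine(matrix, point):
    """Return (linear part @ point) + translation for one (x, y, z) point."""
    return [
        sum(row[i] * point[i] for i in range(3)) + row[3]
        for row in matrix
    ]

n_params = sum(len(row) for row in affine)
new_point = apply_affine(affine, [10.0, 20.0, 30.0])

print("parameters:", n_params)
print("transformed point:", new_point)
```

A rigid-body transform would restrict the same matrix to 6 of those parameters (3 rotations and 3 translations), which is why the degrees-of-freedom count is a handy way to describe how flexible a transform is.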

If you want to do an affine transform to standard space (TLRC or MNI), you can use @auto_tlrc, 3dAllineate, or align_epi_anat.py. I recommend @auto_tlrc, as it gives me the best ANAT->TLRC/MNI transformations.

@auto_tlrc -base TT_N27+tlrc. -input anat+orig

If we knew that our datasets often had very different center points (which you can measure with @Center_Distance), we could modify the above command to automatically align the centers of the datasets before beginning the transform via the -init_xform option!

@auto_tlrc -base TT_N27+tlrc. -input anat+orig -init_xform CENTER
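To get a feel for what a center-alignment step does, here is a rough Python sketch: it measures the distance between the centers of two datasets and builds the translation-only matrix that would line them up. The center coordinates are invented for illustration, and this is not a description of how @Center_Distance or @auto_tlrc work internally; it only shows the idea.

```python
import math

# Hypothetical dataset centers in mm (x, y, z); made-up values.
anat_center = [12.0, -40.0, 25.0]
template_center = [0.0, -16.0, 17.0]

# Offset that moves the anatomical center onto the template center.
shift = [t - a for a, t in zip(anat_center, template_center)]

# Euclidean distance between the two centers.
distance = math.sqrt(sum(s * s for s in shift))

# Translation-only affine: identity linear part plus the shift column.
init_xform = [
    [1.0, 0.0, 0.0, shift[0]],
    [0.0, 1.0, 0.0, shift[1]],
    [0.0, 0.0, 1.0, shift[2]],
]

print(f"centers are {distance:.1f} mm apart")
for row in init_xform:
    print(row)
```

Starting the registration from a transform like this means the optimizer only has to find the remaining rotation, scaling, and shearing, rather than also hunting for a large shift.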

align_epi_anat.py and @auto_tlrc are also the defaults if you use afni_proc.py with the align and tlrc blocks. And if you aren’t currently using afni_proc.py, I would highly recommend looking into it!

Nonlinear Transforms

In addition to linear transforms, AFNI “recently” (read: in 2013) added a new program for performing nonlinear warps (3dQwarp). There is also a Python wrapper script (auto_warp.py), which will pair an affine transform and the nonlinear transform, similar to SPM’s DARTEL transform (J. Ashburner. A Fast Diffeomorphic Image Registration Algorithm. NeuroImage, 38(1):95-113, 2007.). I highly recommend using auto_warp.py for your nonlinear transform needs.

One important note is that you can call 3dQwarp with an -allineate option which will use 3dAllineate to do a linear affine transform prior to doing the nonlinear warping step. Here’s where things start to get a bit tricky! If you use 3dQwarp with the -allineate option, then the output transformations are automatically concatenated for you! That means you get to skip the arguably “yucky” stuff that I’m going to talk about below. However, if you use auto_warp.py, you will benefit from a lot of automatic options being applied (that likely will help your registration), at the cost of then having to concatenate your transforms! But it’s really not that bad!

Concatenating and Applying Transforms

Why concatenate your transforms together when you could just apply them separately? Well, it’s usually a good idea to perform as few warping operations on your data as possible, so that you only resample (interpolate) your data a single time. Combining these affine and nonlinear warps is fairly straightforward and takes a couple of steps:

1. Set up the initial warp of the original subject data to the TT_N27 template:

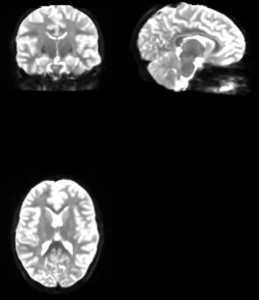

auto_warp.py -base TT_N27+tlrc -input anat+orig -skull_strip_input yes

Even though auto_warp.py has given us the necessary files, as an exercise here you can try applying the matrices/warps to recreate the process! Here we apply the combined affine and nonlinear warps on the original (skull-stripped) anatomical in order to warp to TT_N27:

3dNwarpApply -master TT_N27+tlrc \
    -source subject_anatomical+orig \
    -nwarp 'awpy/anat.un.aff.qw_WARP.nii awpy/anat.un.aff.Xat.1D' \
    -prefix subject_anatomical_MNI

If you wanted to warp your EPI data, you could concatenate the motion correction transforms, the EPI->ANAT transformation, and the ANAT->MNI transformation together, so that the data are interpolated only a single time. More on that later (though you can read ahead and look at how afni_proc.py does it!).
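As a rough illustration of what concatenation means for the affine pieces: each 3x4 matrix can be padded to 4x4 and the matrices multiplied together, so that one combined matrix does the work of the whole chain. The Python sketch below uses made-up matrices (a translation standing in for motion correction, a scaling standing in for EPI->ANAT) and hypothetical helper names. It only shows the arithmetic, not what cat_matvec or afni_proc.py actually write out.

```python
def to_4x4(m):
    """Pad a 3x4 affine with the row [0, 0, 0, 1]."""
    return [list(row) for row in m] + [[0.0, 0.0, 0.0, 1.0]]

def matmul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def apply_affine(m, p):
    """Apply a 4x4 affine to an (x, y, z) point."""
    v = list(p) + [1.0]
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(3)]

# Made-up stand-ins for two steps in the chain.
motion = to_4x4([[1, 0, 0, 2.0], [0, 1, 0, 0.0], [0, 0, 1, -1.0]])
epi2anat = to_4x4([[0.5, 0, 0, 0.0], [0, 2.0, 0, 4.0], [0, 0, 1, 0.0]])

# The step that is applied first goes on the right of the product.
combined = matmul(epi2anat, motion)

point = [8.0, 3.0, 5.0]
two_steps = apply_affine(epi2anat, apply_affine(motion, point))
one_step = apply_affine(combined, point)
print("two steps:", two_steps)
print("one step: ", one_step)

# Order matters: swapping the product gives a different transform.
swapped = apply_affine(matmul(motion, epi2anat), point)
print("swapped:  ", swapped)
```

The combined matrix lands the point in the same place as the two separate steps, while the swapped order does not, so it pays to double-check the order when concatenating. Nonlinear warps are displacement fields rather than matrices, so they can’t be folded in this way, which is why 3dNwarpApply is handed the list of warps instead.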

Is interpolation really that bad?

Some people think so. But it happens one way or another! You’re interpolating during slice time correction, motion correction, any type of transform, and possibly during other mathematical operations (like smoothing). The best you can do is try to reduce the number of interpolations, which afni_proc.py does for you.
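A toy example shows why fewer interpolations is better. The Python sketch below shifts a 1D "image" containing a single bright voxel by one voxel in total, either as two half-voxel shifts (two linear interpolations) or as one combined shift. The function `shift_linear` is a hypothetical helper written for this illustration and has nothing to do with AFNI’s own code; real pipelines use fancier interpolation in 3D, but the blurring effect is similar in spirit.

```python
import math

def shift_linear(signal, shift):
    """Shift a 1D signal right by `shift` voxels using linear interpolation.

    Samples that fall outside the signal are treated as 0.
    """
    n = len(signal)
    out = []
    for i in range(n):
        src = i - shift              # where this output voxel comes from
        lo = math.floor(src)         # nearest input voxel to the left
        frac = src - lo              # fractional distance past that voxel
        left = signal[lo] if 0 <= lo < n else 0.0
        right = signal[lo + 1] if 0 <= lo + 1 < n else 0.0
        out.append((1.0 - frac) * left + frac * right)
    return out

image = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]

# Two separate resampling steps of half a voxel each.
twice = shift_linear(shift_linear(image, 0.5), 0.5)

# One resampling step with the combined shift.
once = shift_linear(image, 1.0)

print("two interpolations:", twice)
print("one interpolation: ", once)
```

The doubly interpolated version smears the bright voxel across three voxels and halves its peak, while the single combined shift keeps it intact.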

Where do we go from here?

I recommend reading the cat_matvec help as well as the help for 3dAllineate and 3dQwarp. Most likely, though, you’ll be applying the transforms using 3dNwarpApply, which has a new option, -iwarp, that will invert the warps supplied to it!